Sensing

Pervasive sensing is the primary focus of our research group. We sense both humans and their environment, using different modalities (such as locomotion, acoustic, RF, and medical sensing) under different infrastructures (such as active and passive sensing, wearables, and fixed-infrastructure sensing). The objective is to develop ubiquitous applications that can run on low-cost sensing infrastructure. On the algorithmic side, we draw on signal processing, machine learning, and deep learning to meet these goals. Some of our recent exciting projects are as follows.

Passive Sensing: Using RF to Sense Humans, the Environment, and Contexts

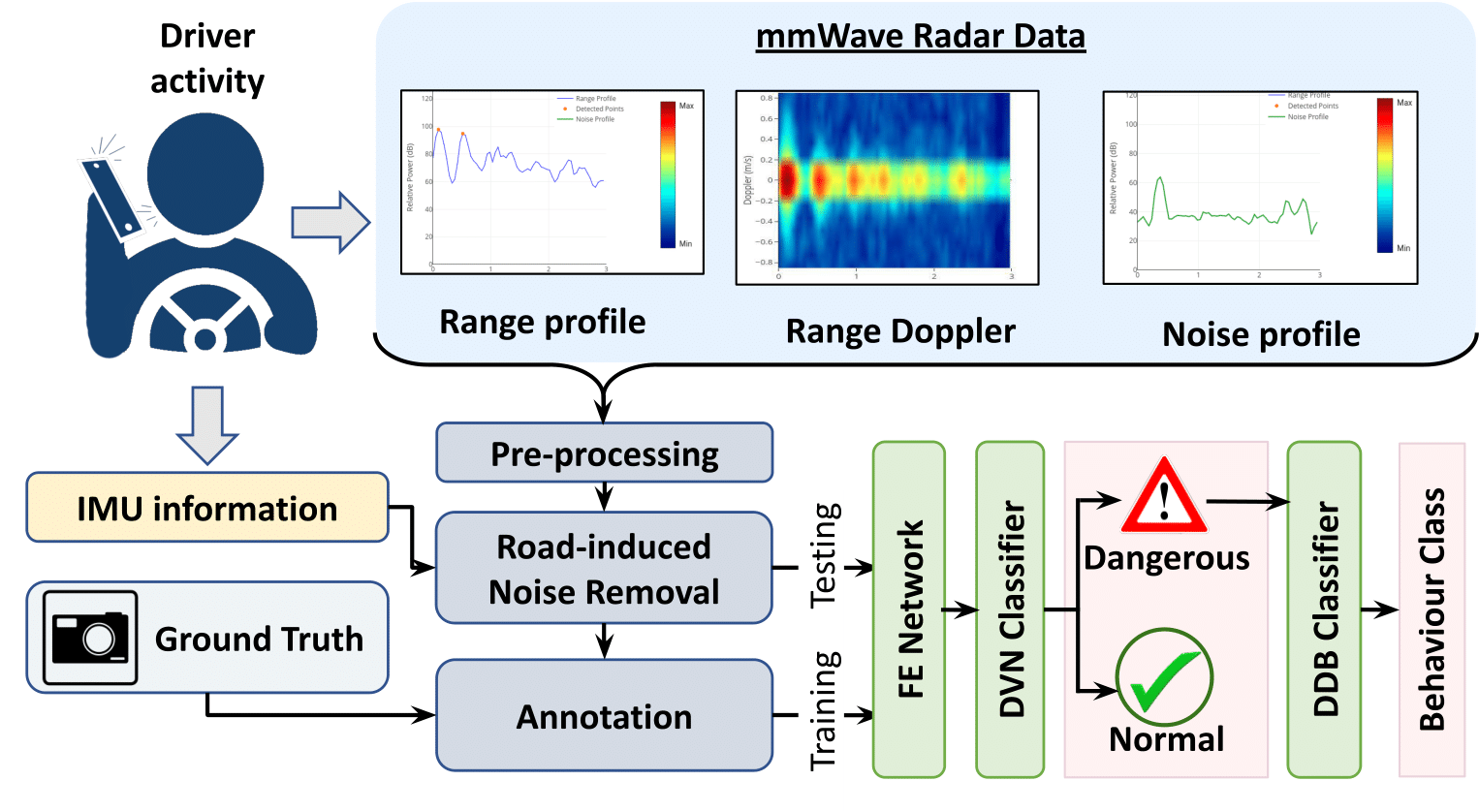

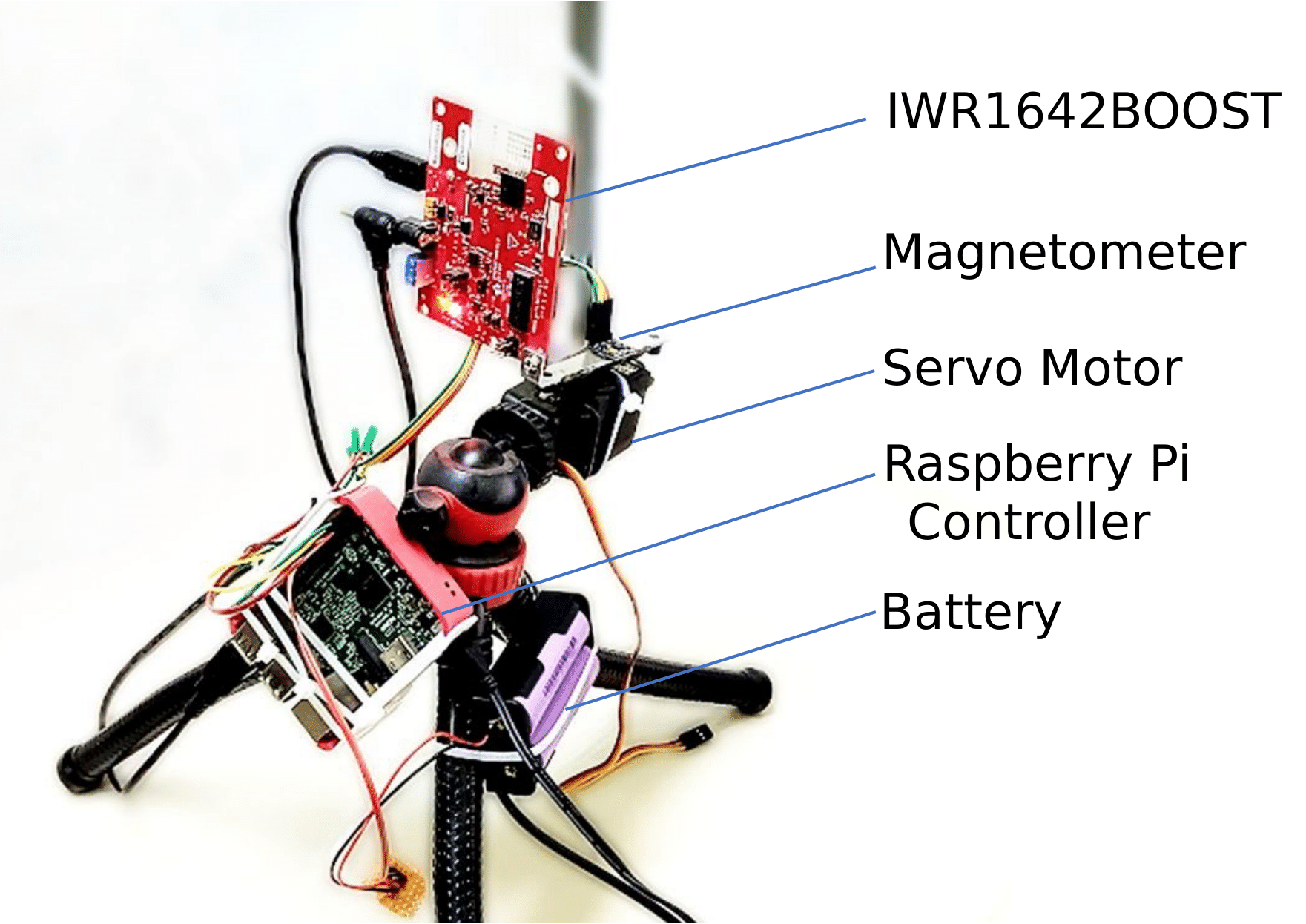

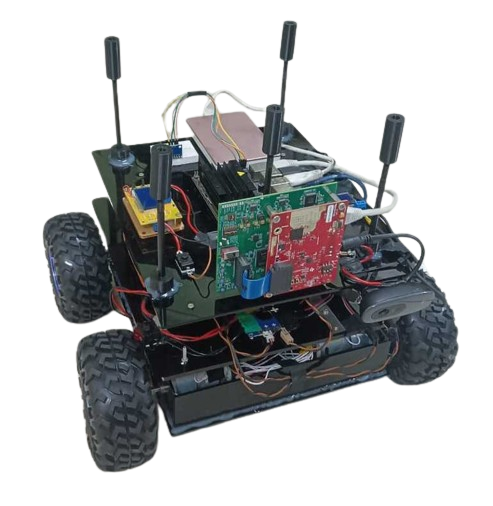

Imagine living in an intuitive, interactive space without needing to learn the grammar of interacting with it. One would not need to interact in a specific way, issue voice commands, or always wear a device. Such an intelligent space could also be shared with others without degrading anyone's experience of interacting with it. This vision of seamless smart spaces is not new; however, such spaces are still not part of most people's everyday lives. To make this vision an everyday reality, we are developing passive sensing systems, primarily based on RF (such as mmWave), that monitor not only humans but also the environment and the context of their interactions. As one application, we developed a passive driving-behavior monitoring device using mmWave sensing. We are also developing platforms for continuous monitoring of multi-user activities at different scales (ADLs, IADLs, exercises, etc.) using this passive sensing architecture.
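Commodity mmWave radars are typically FMCW devices. As a minimal sketch, assuming an FMCW radar with chirp slope S and ADC sampling rate f_s (the parameter values below are illustrative, not taken from our device), a reflector at range R produces a beat tone at f_b = 2RS/c, so the range can be read off the peak bin of a range FFT as R = c·f_b/(2S). The code simulates a noise-free beat signal and recovers the range with a plain DFT; `simulate_beat` and `estimate_range` are hypothetical helpers, not part of our system.

```python
import cmath
import math

C = 3.0e8  # speed of light (m/s)


def simulate_beat(range_m, slope_hz_per_s, fs_hz, n):
    """Ideal complex beat tone for a single reflector at range_m (hypothetical, noise-free)."""
    f_b = 2.0 * range_m * slope_hz_per_s / C  # beat frequency of an FMCW chirp
    return [cmath.exp(2j * math.pi * f_b * k / fs_hz) for k in range(n)]


def estimate_range(samples, slope_hz_per_s, fs_hz):
    """Range-FFT sketch: find the DFT bin with the most energy and map it back to range."""
    n = len(samples)
    best_bin, best_mag = 0, -1.0
    for b in range(n // 2):  # search positive beat frequencies only
        acc = sum(s * cmath.exp(-2j * math.pi * b * k / n) for k, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = b, abs(acc)
    f_b = best_bin * fs_hz / n  # bin index -> beat frequency (Hz)
    return C * f_b / (2.0 * slope_hz_per_s)  # beat frequency -> range (m)


if __name__ == "__main__":
    slope = 30e12  # illustrative chirp slope: 30 MHz/us
    fs = 10e6      # illustrative ADC rate: 10 Msps
    print(estimate_range(simulate_beat(2.0, slope, fs, 256), slope, fs))
```

With 256 samples at 10 Msps and a 30 MHz/µs slope, one bin corresponds to about 0.195 m, which equals c/(2B) for the 768 MHz bandwidth swept during sampling, so the estimate lands within one bin of the true range. A practical pipeline would use an FFT library, windowing, and Doppler and angle processing on top of this.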

Smart Transportation: Sensing the Road, the Vehicle, and the Driver

Road travel in developing countries, particularly in the Indian subcontinent, is highly unpredictable because of multiple socio-economic factors: many roads are bumpy, infrastructure is often poor, streets are congested with heavy traffic, and so on. We develop pervasive sensing modalities to sense the road, the transport infrastructure, and the driver. One critical issue is identifying the various points of interest (PoIs) on the road that affect travel. We use smartphone-based crowdsensing, leveraging the sensors embedded in today's smartphones, such as the IMU and GPS, to detect these PoIs and tag them on a map. Another crucial issue is monitoring a driver's behavior and how the driver interacts with landmarks on the road, such as speed breakers, potholes, and turns. We also aim to understand driving behavior and how it affects, and interacts with, the different maneuvers the driver takes. Collectively, we focus on citizens' day-to-day experience on the road, developing assistive technologies to support them during their daily commute.
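To make the crowdsensing idea concrete, here is a minimal sketch of detecting road bumps (such as potholes or speed breakers) from a phone's vertical acceleration and then aggregating reports from several users on a map grid. It assumes the phone's z-axis is aligned with the vehicle's vertical axis and uses a simple threshold; `threshold`, `min_gap_s`, `cell_deg`, and `min_users` are hypothetical values, and this is not the detection method used in our work.

```python
from collections import defaultdict
from dataclasses import dataclass

G = 9.81  # gravitational acceleration (m/s^2)


@dataclass
class Sample:
    t: float    # timestamp (s)
    az: float   # vertical acceleration including gravity (m/s^2); phone assumed vehicle-aligned
    lat: float  # GPS latitude (degrees)
    lon: float  # GPS longitude (degrees)


def detect_bumps(samples, threshold=3.0, min_gap_s=1.0):
    """Flag jolts whose vertical deviation from gravity exceeds `threshold` (hypothetical value).
    Detections less than min_gap_s apart are merged into a single event at the first location."""
    events = []
    last_t = None
    for s in samples:
        if abs(s.az - G) > threshold:
            if last_t is None or s.t - last_t > min_gap_s:
                events.append((s.lat, s.lon))
            last_t = s.t
    return events


def aggregate(reports_per_user, cell_deg=0.0005, min_users=2):
    """Crowdsensing step: snap events to a lat/lon grid and keep cells reported by >= min_users users."""
    users_per_cell = defaultdict(set)
    for user, events in reports_per_user.items():
        for lat, lon in events:
            cell = (round(lat / cell_deg), round(lon / cell_deg))
            users_per_cell[cell].add(user)
    return sorted(
        (i * cell_deg, j * cell_deg)
        for (i, j), users in users_per_cell.items()
        if len(users) >= min_users
    )
```

Requiring agreement from several users is a simple way to suppress one-off jolts, such as a phone being picked up; a practical system would also reorient the phone's axes to the vehicle frame and account for vehicle speed.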

Human Sensing: Activity Recognition and Annotation

Human activity recognition (HAR) is one of the essential building blocks behind many pervasive applications. We are developing lightweight and cost-effective approaches for HAR, ranging from macro-activities, such as walking, running, writing on a board, and detecting meeting groups, to micro and fine-grained activities, such as cooking in a smart-home scenario. We use various modalities, such as IMU, acoustic, and RF, to infer these activities. Another prime focus of ours is activity annotation. Typical HAR models are trained in a supervised setting and therefore need a massive amount of labeled data. The question is: how do we label, or annotate, this data? We are developing robust, automated approaches that use auxiliary sensing modalities, such as acoustics, to label IMU data for activity recognition. This is a challenging problem because the method has no prior knowledge of the labels, so it needs to work in an unsupervised way. We also study the granularity and informativeness of these labels and how the generated labels can contribute to developing large-scale activity recognition models.
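The annotation idea can be illustrated with a simplified sketch of cross-modal label transfer: the IMU stream is split into fixed windows, and each window takes the majority label of the time-aligned audio events if enough of them agree. This sketch assumes the audio events are already labeled by some audio tagger (a hypothetical input), which sidesteps the unsupervised part of the problem described above; the window length, `min_agree`, and the mean/standard-deviation features are illustrative choices, not our method.

```python
import math
from collections import Counter


def windows(imu, fs_hz, win_s):
    """Split a 1-D IMU magnitude stream into non-overlapping windows; yields (start_time_s, samples)."""
    step = int(fs_hz * win_s)
    for start in range(0, len(imu) - step + 1, step):
        yield start / fs_hz, imu[start:start + step]


def features(seg):
    """Two simple per-window features: mean and standard deviation of acceleration magnitude."""
    mu = sum(seg) / len(seg)
    sd = math.sqrt(sum((x - mu) ** 2 for x in seg) / len(seg))
    return (mu, sd)


def transfer_labels(imu, fs_hz, audio_events, win_s=2.0, min_agree=0.6):
    """Label each IMU window with the majority audio event that falls inside it.

    audio_events: list of (timestamp_s, label) pairs from a hypothetical audio tagger.
    Windows with no audio evidence, or whose audio labels agree less than min_agree,
    are left unlabeled (None) rather than guessed.
    """
    out = []
    for t0, seg in windows(imu, fs_hz, win_s):
        inside = [lab for t, lab in audio_events if t0 <= t < t0 + win_s]
        label = None
        if inside:
            lab, count = Counter(inside).most_common(1)[0]
            if count / len(inside) >= min_agree:
                label = lab
        out.append((t0, features(seg), label))
    return out
```

Leaving ambiguous windows unlabeled trades label coverage for label quality; the labeled windows, with their features, could then serve as training data for a conventional supervised HAR classifier.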