Computer Human Interaction

The primary focus of our lab is to develop pervasive and ubiquitous systems for computer-human interaction. Toward this goal, we use interactive sensing modalities such as video, audio, RF sensing, smartphone-based sensing, and smart wearables to design assistive systems for the general public, particularly in underdeveloped and developing countries. Some of our current projects are described below.

Smart Interface for Virtual Meeting Apps

For over two decades, video conferencing has been a productive way for multiple participants to hold conversations online. During the COVID-19 pandemic and beyond, it became a necessity rather than an option, as almost every meeting, whether a classroom lecture or a business meeting, was conducted virtually on one of the many online video conferencing platforms. However, there is serious concern about the quality of these meetings because participants are often disengaged, particularly in business meetings, classroom lectures, and educational seminars. Many participants remain passive during a session, especially when they find something more engaging to do, such as reading a storybook or an online article, or browsing their social media feeds. Consequently, attending the meeting becomes merely proof of participation, like answering the class roll call without following the lecture!

We develop intelligent interfaces that monitor how cognitively involved online meeting attendees are in a meeting. We study the behavioral patterns of individuals during online discussions and then use active and passive sensing modalities, such as video, RF, acoustics, and wearables, to determine whether a participant is cognitively involved in the discussion.

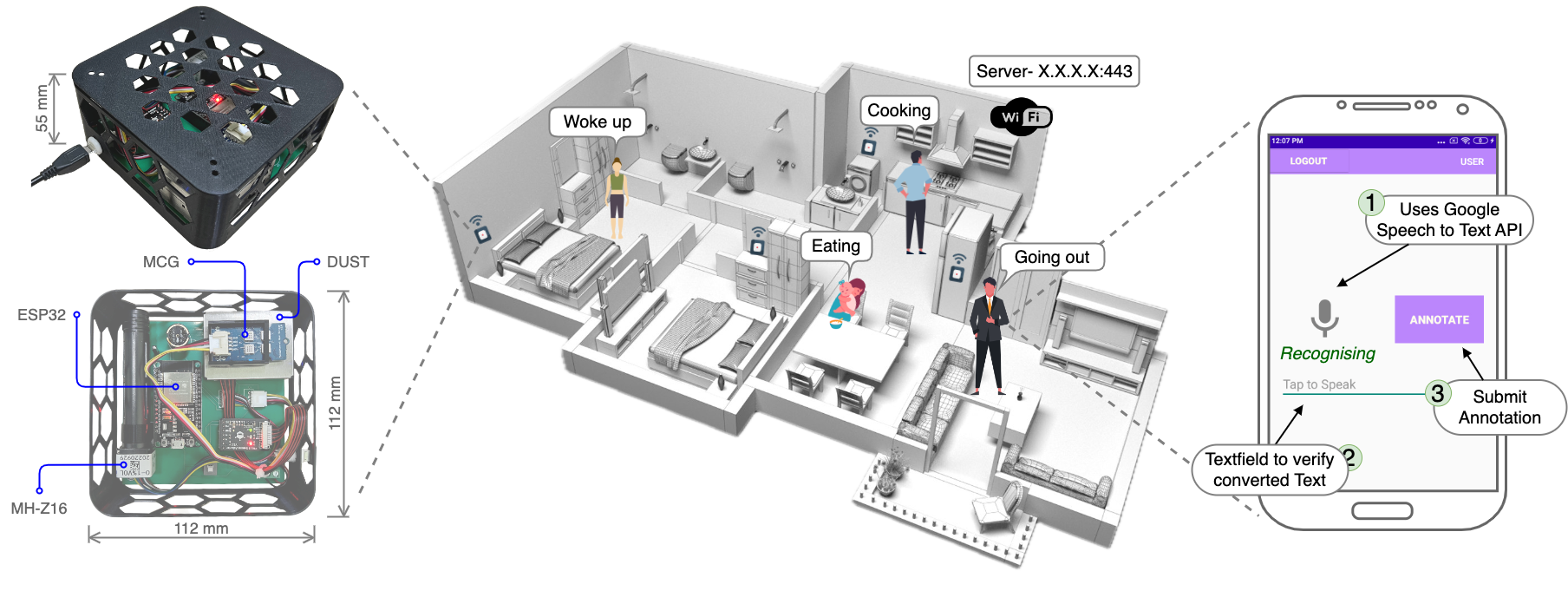

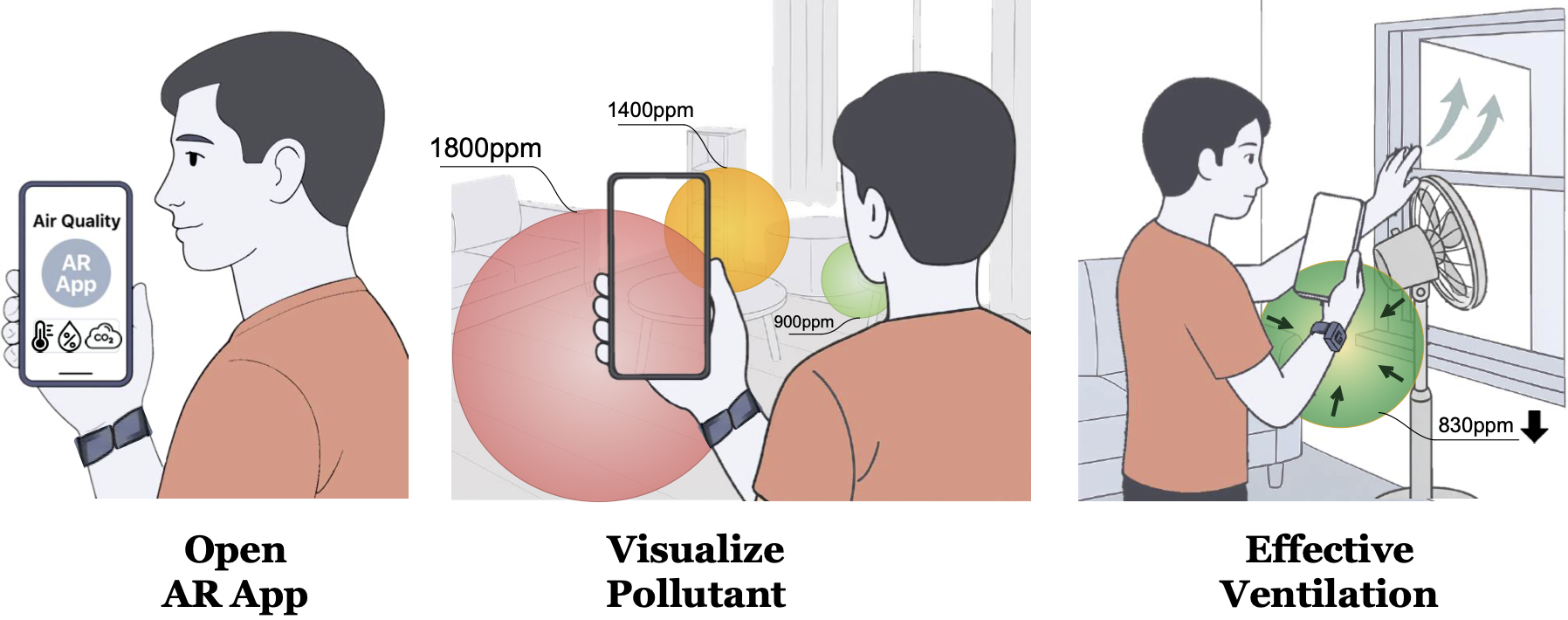

Sustainable Environment: Making the World a Smart Place to Live

Recently, we have started working on environmental sensing. Six of the ten most polluted cities in the world are in India. We aim to develop a low-cost, portable pollution monitoring device that helps individuals detect different pollutants in both outdoor and indoor environments. We also work on designing an efficient mechanism for deciding where to place pollution monitoring devices in an indoor setup, understanding the impact of pollutants on human cognition and behavior, and understanding how various indoor activities affect the pollution level of a room.

Smartphone Interfaces for Driving Safety Applications

Road safety is a primary concern today: every year, road accidents claim nearly 13 lakh (1.3 million) lives and cause severe injuries to crores of people, with 90% of these fatalities occurring in developing countries. According to Youth for Road Safety (YOURS), more young people aged 15 to 29 die from road crashes than from HIV/AIDS, malaria, tuberculosis, or homicide combined. In India alone, about eighty thousand people are killed in road crashes every year, which is thirteen percent of all road fatalities worldwide. The person behind the wheel plays an important role in most crashes, which typically occur because of the driver's carelessness or lack of awareness of the surroundings. We have developed a number of platforms and applications, primarily based on smartphone sensing, to encourage and promote safe driving.

ZeCA: Zero-Configuration Alarms for Preventing Smartphone Usage during Driving

Sugandh Pargal, Neha Dalmia, Soumyajit Chatterjee, Sandip Chakraborty

ACM JCSS 2024

IEEE MUST 2022 (in conjunction with IEEE MDM 2022)

ACM HotMobile 2022 (Posters)

Source Code: GitHub Repository

Teaser Video: Watch on YouTube

Mobile Interface Design for Challenged and Disabled People

Today's smartphones are getting smarter; however, they may not be friendly to challenged and disabled people. For example, people with medical conditions such as dactylitis, sarcopenia, or joint pain may have difficulty typing on a smartphone's conventional QWERTY soft keyboard. Existing gaze-based approaches do not work well without commercial eye trackers, and voice-based approaches struggle in noisy environments. We develop intelligent interfaces that help such people interact with their smartphones seamlessly using alternate modalities such as head gestures and visual cues. This is particularly challenging because the method must be efficient enough to run on a smartphone. To solve this problem, we develop lightweight tracking techniques that leverage online learning.